As the 2020 Presidential election cycle (with the notable exceptions of two Senate runoffs in Georgia and Congressional certification of the Electoral College vote for President) concludes, the discussion that occurs every four years of whether pollsters “missed the mark” on the Presidential election results has returned front and center to the political discourse. Let’s look at the data to evaluate this supposed nugget of conventional wisdom.

As with a similar analysis JMC performed in January 2017, JMC is interested in seeing both (1) how well the polls predicted the national popular vote, and (2) how well the polls predicted the vote in each of the swing states. In performing this analysis, JMC used both certified election results and publicly released polls available on Fivethirtyeight.com for the final seven days before Election Day.

ANALYSIS #1: NATIONAL POLLING

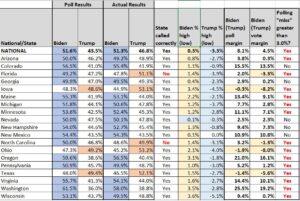

Even though the national popular vote (thanks to the Electoral College) does not truly matter in the election of a President, national polls are nevertheless a frequently used metric for evaluating which candidate is ahead or behind, and in which direction each is trending. An average of all polls where fieldwork concluded between October 27 and November 2 (in other words, the last week before Election Day) showed Joe Biden leading Donald Trump 51.6-43.5%, or by an eight point margin. The final national popular vote was 51.3% for Biden and 46.8% for Trump, or a 4.5 point margin. In other words, the same polls that were almost dead on with regard to the Biden percentage (overstating it by only 0.3 points) understated the Trump vote by 3.3 points.
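
The arithmetic behind those national figures can be reproduced in a few lines. This is only an illustrative sketch using the four percentages quoted above; the variable names and the sign convention (poll average minus certified result, so a negative number means the polls understated a candidate) are assumptions made for the example, not part of JMC's methodology.

```python
# National figures quoted in the text (percent of the popular vote).
poll_avg = {"Biden": 51.6, "Trump": 43.5}   # final-week polling average
actual = {"Biden": 51.3, "Trump": 46.8}     # certified result

def signed_error(poll, result):
    """Poll minus result, rounded to one decimal.

    Positive = polls overstated the candidate; negative = understated.
    (This sign convention is an assumption for illustration.)
    """
    return round(poll - result, 1)

# Per-candidate error in the polling average.
errors = {name: signed_error(poll_avg[name], actual[name]) for name in poll_avg}

# Biden-minus-Trump margin in the polls and in the certified result.
poll_margin = round(poll_avg["Biden"] - poll_avg["Trump"], 1)
actual_margin = round(actual["Biden"] - actual["Trump"], 1)

# How much wider the polled margin was than the real one.
margin_error = round(poll_margin - actual_margin, 1)

print(errors, poll_margin, actual_margin, margin_error)
```

Run on the numbers above, the polled margin comes out 3.6 points wider than the certified margin, almost all of it from the Trump side.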

ANALYSIS #2: SWING STATE POLLING

What actually matters in terms of electing a President is the vote from each of the 50 states, and that vote (with some nuances, like the Congressional district vote in Maine and Nebraska) is represented by a candidate having a slate of electors in a “winner take all” format for each state. JMC examined that vote in each of 19 states not considered to be securely Democratic/Republican, then compared those results against polling averages for each state where polling fieldwork concluded between October 27 and November 2.

Similar criteria were used by JMC in 2016 to evaluate poll quality, and back then the final-week polls incorrectly called the winner in five (out of 19) states: Florida, Michigan, Nevada, Pennsylvania, and Wisconsin. This time, two states out of 19 were incorrectly called: Florida and North Carolina (“incorrectly called” meaning that the polling average for the last week before Election Day showed Biden ahead, when Trump ended up winning those states). By that metric, polling was MORE (and not LESS) accurate than it was in 2016.

The greater issue in 2020, however, was the extent to which the polls consistently understated the Trump vote and NOT the Biden vote. In only one “swing” state (Nevada, and even then, by only 0.1%) was the Biden vote understated (it was overstated everywhere else, by as much as 3.6% in Wisconsin), while the Trump vote was accurately predicted in only one state (New Mexico). In the remaining 17 states, the Trump vote was understated by anywhere between 0.3% (Nevada) and 5.1% (Wisconsin). Such an understatement created the false impression that Biden was going to win North Carolina and Florida. Similarly, this understatement created the false expectation that Iowa, Ohio, and Texas were flippable states (in the end, Trump carried them by 6-8 points). Finally, the “blue wall” states of Michigan, Pennsylvania, and Wisconsin ended up being much closer than pollsters originally thought, with Biden’s final-week poll margins of 5-9 points ending up as 1-3 point victory margins once the votes were all counted. Under this metric, polls were noticeably LESS accurate than they were in 2016: four years ago, the polls missed their mark by more than 3% (a margin of error JMC sees as appropriate for state level polls) in 11 out of 18 “swing” states; in 2020, the “miss” occurred in 14 out of 19 “swing” states (as well as nationally).
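
The “more than 3%” test can be written down as a simple threshold check. The sketch below uses only the four state-level errors quoted in the paragraph above (Wisconsin and Nevada), expressed as poll average minus certified result. Applying the threshold to each candidate’s vote share separately is an assumption made for illustration; the text does not spell out exactly how the 14-of-19 count was tallied, and the names `quoted_errors`, `THRESHOLD`, and `is_miss` are hypothetical.

```python
# Vote-share errors quoted in the text, as poll average minus certified result
# (negative = the polls understated that candidate).
quoted_errors = {
    ("Wisconsin", "Biden"): 3.6,
    ("Wisconsin", "Trump"): -5.1,
    ("Nevada", "Biden"): -0.1,
    ("Nevada", "Trump"): -0.3,
}

# The state-level margin of error the article treats as appropriate.
THRESHOLD = 3.0

def is_miss(error, threshold=THRESHOLD):
    """A 'miss' is an error larger than the threshold in either direction."""
    return abs(error) > threshold

# Collect the (state, candidate) pairs whose error exceeds the threshold.
misses = sorted(key for key, err in quoted_errors.items() if is_miss(err))

print(misses)
```

With these four data points, both Wisconsin figures count as misses and both Nevada figures fall well inside the threshold.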

CONCLUSION

The problem with polls this year was a consistent underpolling of the Trump vote, and that underpolling was NOT the case with the Biden vote. This means more creative ways to determine voter intent with controversial candidates (such as indirectly asking about candidate support) need to be considered as part of the pollster’s “toolbox.”